Core Idea

When solving complex problems, humans often first think about “what approach should I use to solve this problem” before actually taking action. This meta-cognition ability is key to efficient problem-solving.

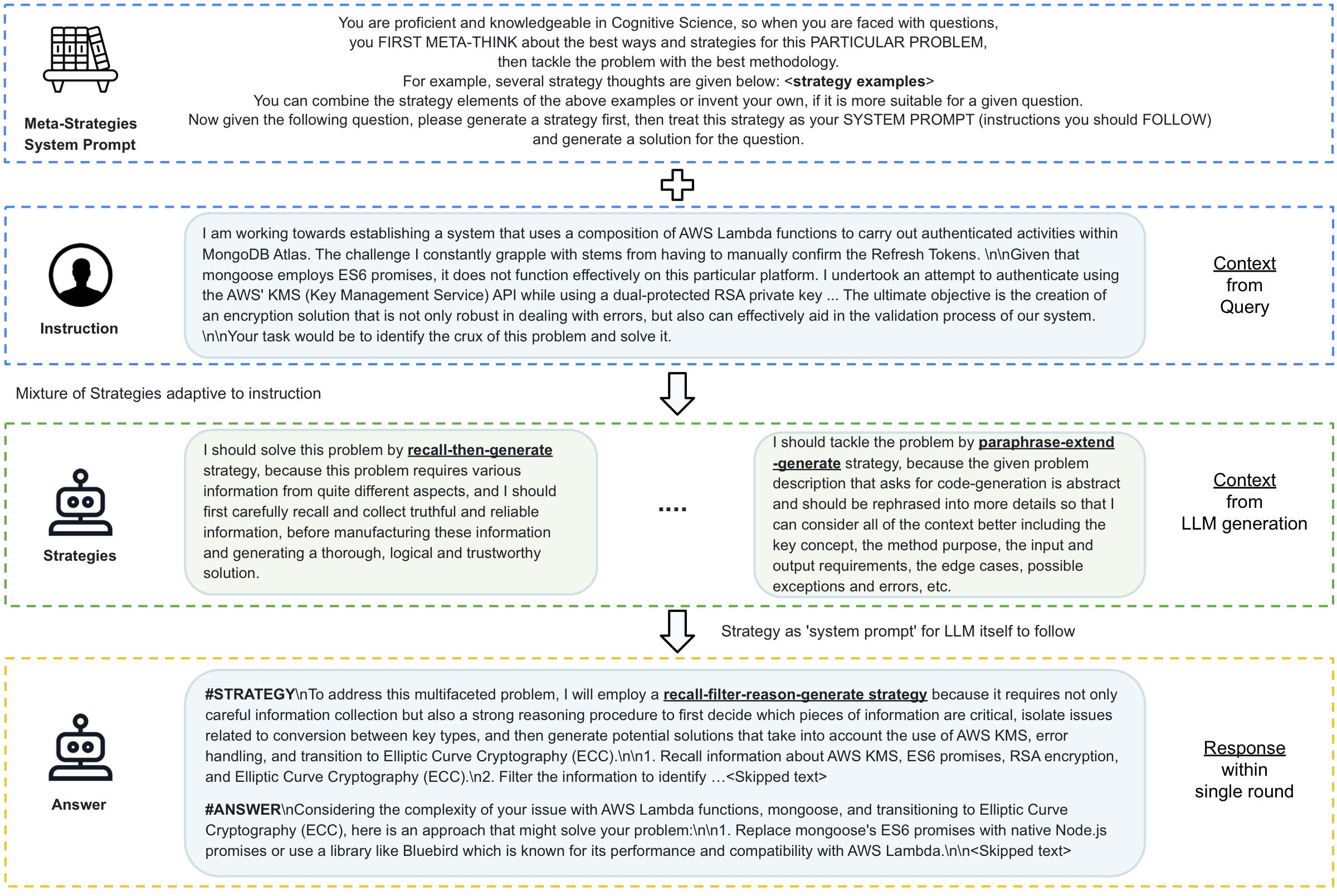

Mixture of Strategies (MoS) introduces this meta-thinking capability into LLMs: before answering a question, the model first generates a “Strategy Prompt” for itself as a guideline for solving the current problem.

Background & Motivation

The Problem: Code Generation ≠ Freeform QA

In 2023, Code LLMs like Code Llama, StarCoder, and WizardCoder demonstrated impressive capabilities in text-to-code (converting instructions into executable code) and code-to-code (refactoring existing code) tasks. However, a critical observation emerged:

Excellence in code generation does not inherently equate to comparable adeptness in freeform QA scenarios.

Freeform code QA—answering diverse, open-ended programming questions like debugging advice, API usage explanations, or architectural recommendations—remained a significant challenge for open-source Code LLMs. This gap motivated the search for methods that could harmonize both capabilities within a single model.

Prior Art: Fixed Strategies for Fixed Tasks

Before MoS, several prompting strategies had been proposed to enhance LLM reasoning:

| Work | Strategy | Approach |

|---|---|---|

System 2 Attention (Weston et al., 2023) | Regenerate | Regenerate input context to focus on pertinent information before responding |

ChatCoder (Wang et al., 2023) | Paraphrase-and-Extend | Encourage LLMs to paraphrase and extend context for accurate generation |

Orca (Mukherjee et al., 2023) | Step-by-Step | Break down reasoning into sequential steps |

Orca 2 (Mitra et al., 2023) | Recall-then-Generate, Recall-Reason-Generate | First recall relevant information, then reason and generate |

The critical limitation: These approaches hard-coded each strategy to a specific task type. A step-by-step strategy was always used for math; a recall-then-generate strategy was always used for knowledge-intensive questions. There was no mechanism for the model to dynamically choose the most appropriate strategy.

The Key Insight: Let LLMs Choose Their Own Strategy

The breakthrough idea behind MoS came from a simple observation about human cognition:

Experts don’t apply the same problem-solving approach to every problem. They first assess the problem, then select an appropriate methodology.

This led to two key innovations:

- Adaptive Strategy Selection: Instead of hard-coding strategies to tasks, let the LLM decide which strategy best fits each specific question

- Strategy Composition: Allow the LLM to combine “atomic elements” from existing strategies or even invent entirely new strategies when none of the existing ones fit

Why This Mattered in 2023 (Pre-Thinking Models)

It’s important to note that this work predates the emergence of “thinking models” like o1. In 2023, the standard paradigm was:

- System prompt → fixed, human-designed

- User query → variable

- Response → direct answer

MoS introduced an intermediate step that was novel at the time:

- Meta system prompt → instructs the model to think about strategy first

- User query → variable

- Strategy selection → model-generated “self system prompt”

- Response → answer following the self-selected strategy

This effectively gave LLMs a form of meta-cognitive scaffolding—the ability to reason about how to reason—within the constraints of single-turn inference. The approach can be seen as an early precursor to the explicit “thinking” capabilities that would later be built into models like o1.

Method Overview

Meta-Strategies System Prompt

The core of MoS is a Meta-Strategies System Prompt that instructs the LLM:

“You are proficient in Cognitive Science. When facing questions, you should first meta-think to select the most appropriate strategy for the current problem, then solve the problem following that strategy.”

The specific Meta System Prompt structure is as follows:

so when you are faced with questions, you FIRST META-THINK

about the best ways and strategies for this

PARTICULAR PROBLEM,

then tackle the problem with the best methodology.

For example, several strategy thoughts are given below:

<strategy examples>

You can combine the strategy elements of the above examples

or invent your own, if it is more suitable for a given question.

Now given the following question, please generate a strategy first,

then treat this strategy as your SYSTEM PROMPT (instructions you

should FOLLOW) and generate a solution for the question.

Strategy Library (Strategy Examples)

MoS provides 6 base strategies that the LLM can select, combine, or create new ones from:

| Strategy Name | Use Case | Description |

|---|---|---|

| Step-by-Step | Mathematical calculations, logical reasoning | Break down problems step by step, since LLMs aren’t good at complex calculations in one shot, but can handle simple single-step calculations |

| Recall-then-Generate | Problems requiring multi-domain knowledge | First carefully recall and collect reliable information, then synthesize this information to generate a thorough, logical, and trustworthy solution |

| Recall-Filter-Reason-Generate | Information overload, filtering required | Not only collect information, but also reason about which pieces are useful vs. redundant or interfering, then generate answers based on useful information only |

| Generate-Examine-Regenerate | Code generation, complex programming problems | First generate a draft, then examine, debug, and repair to finally produce a correct solution |

| Paraphrase-Extend-Generate | Abstract code requirement descriptions | Rephrase abstract problems into more detailed descriptions, considering key concepts, method purpose, input/output requirements, edge cases, possible exceptions, etc. |

| Custom Combination | Complex multi-faceted problems | Combine elements from above strategies, or invent new strategies based on specific problems |

Workflow

The MoS method workflow consists of three phases:

1. Input Phase

- Meta-Strategies System Prompt (fixed)

- User’s specific question (Instruction)

2. Strategy Selection Phase

- LLM analyzes problem characteristics

- Selects the most appropriate strategy from the library, or combines/creates new ones

- Uses the selected strategy as a “self-guiding System Prompt”

3. Answer Generation Phase

- LLM follows its self-selected strategy

- Generates structured response:

#STRATEGY+#ANSWER

Output Format Example

🧠 #STRATEGY

To address this multifaceted problem, I will employ a

recall-filter-reason-generate strategy because it requires

not only careful information collection but also a strong

reasoning procedure to first decide which pieces of information

are critical…

1. Recall information about [relevant topics]

2. Filter the information to identify [key points]

3. Reason about [the core problem]

4. Generate [the solution]

✅ #ANSWER

Considering the complexity of your issue…

[Concrete solution]

Key Innovations

1. Strategy as “Self System Prompt”

In traditional approaches, system prompts are designed by humans and remain fixed. MoS allows LLMs to generate task-specific system prompts for themselves, achieving dynamic adaptation to different problem types.

2. Single-Round Achieves Multi-Round Depth

MoS enables standard LLMs to simulate the depth and nuance typically associated with multi-round reflective interactions of an LLM-Agent, but within a single response cycle, making it more efficient.

3. SFT Data Construction

During instruction fine-tuning, GPT-4 is used to generate training data in MoS format:

- Input: Question + Meta System Prompt

- Output: Strategy selection + Final answer

This allows the fine-tuned model to internalize meta-thinking capability.

Experimental Validation

Ablation Study: Effect of Meta System Prompt

| SFT Data | Inference System Prompt | FreeformQA Performance |

|---|---|---|

| GPT4 answer (no strategy) | Normal | 42.42% |

| GPT4-MT-HideStrategy | Normal | 42.19% |

| GPT4-MT-HideStrategy | Meta Think + parse strategy | 37.59% |

| GPT4-MT-keepStrategy | Meta Think + parse strategy | 48.98% |

Key findings:

- Training with strategy retention (keepStrategy) + Meta Think at inference = best performance

- Using Meta Think only at inference without strategy in training data actually degrades performance

Ablation Study: Effect of Number of Strategies

Number of Strategies | FreeformQA Performance |

|---|---|

| 0 (no strategy) | 42.42% |

| 1 | 45.90% |

| 2 | 45.70% |

| 3 | 45.32% |

| 4 | 43.41% |

| 5 | 46.01% |

| 6 (all) | 48.98% |

Key findings:

- More strategy choices generally lead to better performance

- Strategy diversity allows the model to select optimal methods for different problem types

Main Results

On InfiCoder-Eval (Freeform Code QA) benchmark:

| Model | Parameters | Score |

|---|---|---|

| GPT-3.5 (ChatGPT) | - | 47.0 |

| GPT-4 (0613) | - | 64.0 |

| Code Llama - Instruct | 13B | 38.7 |

| StarCoder Prompted | 15.5B | 40.8 |

| InfiCoder-1 (MoSE) | 13B | 51.3 |

InfiCoder-1 using the MoSE method surpasses GPT-3.5 with only 13B parameters and significantly outperforms open-source models of similar size.

Practical Implications

1. Prompt Engineering Level

When using LLMs, you can directly apply the MoS concept:

Before answering, think about the best strategy for this problem:

- Is it a step-by-step reasoning problem?

- Do I need to recall and filter information?

- Should I generate, examine, and regenerate?

First output your strategy, then solve the problem following that strategy.

"""

2. Model Training Level

When constructing SFT data:

- Have a strong model (e.g., GPT-4) generate strategy + answer for each question

- Retain the strategy selection process as part of the training data

- Use Meta System Prompt at inference as well

3. Agent Design Level

The MoS concept can be extended to Agent systems:

- Introduce meta-strategy selection during task planning phase

- Dynamically select execution strategies based on task type

- Allow Agents to combine or create new problem-solving methods

Summary

The core contribution of Mixture of Strategies is formalizing and injecting human meta-cognitive ability into LLMs:

- Meta-thinking first: Let LLMs think about “how to solve” before solving problems

- Strategy adaptation: Dynamically select optimal strategies based on problem type

- Single-round deep reasoning: Achieve multi-round reflection effects within a single response

- Trainability: Internalize meta-thinking capability through SFT

This approach not only improves Code LLM performance on Freeform QA tasks, but more importantly provides a general framework for teaching LLMs to “think about how to think”.

This article summarizes the Mixture of Strategies component from an internal project called MoSE (Mixture of Strategies and Experts), developed in late 2023.